Neural Shaders

2023-04-13

Another post about something I've been playing around with but haven't written up yet. "Neural Shaders" are likely to be a multi-post subject, because I find them so fascinating. Sure, they have their disadvantages and limitations, but for game designers, I think there is a possibility for some interesting new games -- yes, not just visuals.

What's a Neural Shader?

I don't quite know yet. People have explored ambient occlusion with neural networks; probably the closest concept to this is style transfer, or real-time image editing. ControlNet is similar, but it is diffusion-based and so not real-time. A neural shader, then, is a shader in which the work of the algorithm is done by a neural network.
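To make that definition a little more concrete, here is a minimal sketch of a per-pixel neural shader in plain Python. Everything specific in it is an assumption for illustration only: the tiny 3-8-3 fully connected network, the tanh and sigmoid activations, the random (untrained) weights, and the names `init_mlp`, `shade_pixel` and `shade_image`. A real version would run on the GPU with trained weights.

```python
import math
import random

def init_mlp(sizes, seed=0):
    """Create random weights for a small fully connected network.

    `sizes` lists the layer widths, e.g. [3, 8, 3] for RGB -> 8 hidden -> RGB.
    The architecture is a hypothetical choice for this sketch.
    """
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)]
                   for _ in range(n_out)]
        biases = [0.0] * n_out
        layers.append((weights, biases))
    return layers

def shade_pixel(layers, rgb):
    """Map one input color (floats in [0, 1]) to one output color."""
    x = list(rgb)
    for i, (weights, biases) in enumerate(layers):
        z = [sum(w * v for w, v in zip(row, x)) + b
             for row, b in zip(weights, biases)]
        if i < len(layers) - 1:
            x = [math.tanh(v) for v in z]                # hidden layers
        else:
            x = [1.0 / (1.0 + math.exp(-v)) for v in z]  # squash into (0, 1)
    return tuple(x)

def shade_image(layers, image):
    """Apply the network independently to every pixel, like a fragment shader."""
    return [[shade_pixel(layers, px) for px in row] for row in image]

# A 2x2 stand-in for the basic scene render, with made-up colors.
image = [[(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)],
         [(0.0, 0.0, 1.0), (0.5, 0.5, 0.5)]]
layers = init_mlp([3, 8, 3])
stylised = shade_image(layers, image)
```

The point of the sketch is only the shape of the computation: each output pixel depends on nothing but the matching input pixel and the network weights, which is what lets it run in parallel like any other shader.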

Why?

Three reasons. First, some styles just can't be captured by the very algorithmic design process of shaders. There are some fantastic shader artists out there, but the most impressive shaders are usually the most realistic. Art goes beyond realism, though, and can fall into the domains of the impressionistic and surreal. Here it is possible, but harder, to design an appropriate shader.

Second, maybe in the future this could be used to reduce the runtime of expensive shaders. I'm not a technical artist, so I don't know much about how expensive shaders can be, but I do know that they are a sequence of parallel computations. Neural networks are great for exchanging computation for memory. Applying them to shaders means we can potentially make a slow shader real-time, at the cost of a memory overhead. For example, the game Manifold Garden used many multi-pass shaders to get a very clean outline effect, even at long distances. There was a lot of optimization that went into this. What if, instead, we could speed that all up with a neural network?

And lastly, it just looks cool! I think even the version with the visual artifacts has its own appeal, similar in some way to Dreams's painterly look.

How?

I'm going to leave the technical details for a future post. Instead, I want to focus on the "how" from a design perspective. First, a basic scene render is set up. Because we are only using pixel colors for the mapping, the materials have to be chosen so that they best preserve a one-to-one mapping. This is hard, and even impossible for some designs if we want the result to generalize. More research needs to be done here -- there are some promising directions.
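One part of that constraint is easy to check mechanically: a mapping from pixel color to pixel color cannot send the same input color to two different outputs. Below is a rough sketch of such a check, assuming images are nested lists of integer `(r, g, b)` tuples and using a hypothetical helper name, `find_conflicts`. It only tests this one direction of the one-to-one requirement, and it uses exact color equality, where a practical check would probably allow some tolerance.

```python
from collections import defaultdict

def find_conflicts(source, target):
    """Return source colors that are paired with more than one target color.

    `source` and `target` are same-sized images given as lists of rows of
    (r, g, b) tuples. The function and argument names are hypothetical.
    """
    seen = defaultdict(set)
    for src_row, tgt_row in zip(source, target):
        for src_px, tgt_px in zip(src_row, tgt_row):
            seen[src_px].add(tgt_px)
    return {src: tgts for src, tgts in seen.items() if len(tgts) > 1}

# Made-up 1x3 image pair: red appears twice in the render but is painted
# with two different colors in the stylised target.
render = [[(255, 0, 0), (0, 255, 0), (255, 0, 0)]]
painting = [[(200, 30, 30), (20, 180, 60), (90, 10, 10)]]

conflicts = find_conflicts(render, painting)
for src, tgts in conflicts.items():
    print(src, "maps to", sorted(tgts))
```

Any color reported here is one the network cannot reproduce exactly for every pixel, so either the scene materials or the target image would need adjusting.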

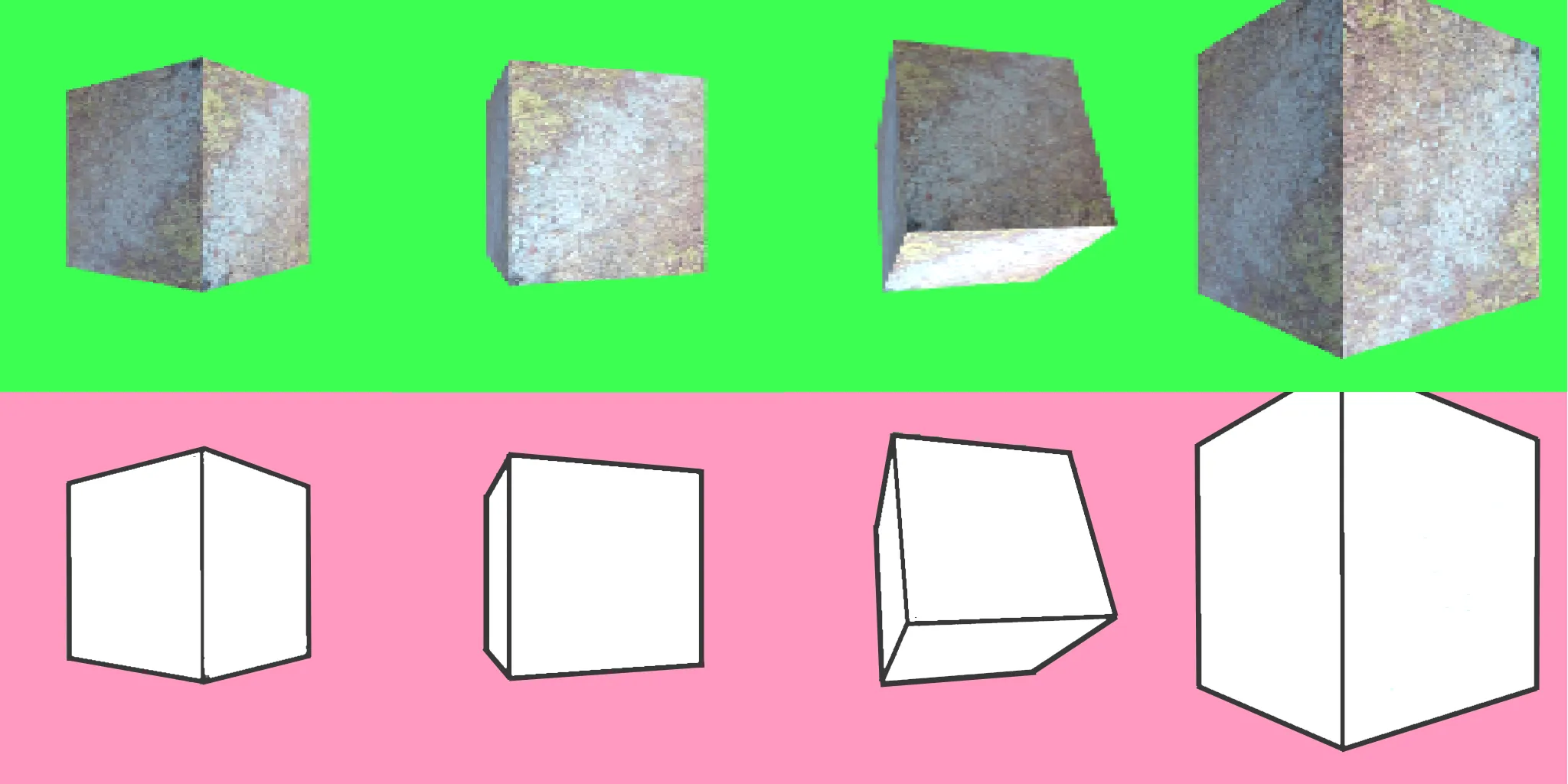

Here are some examples of image pairs I have trained on:

Results.

Here I am playing around with the final results in real time. I have sped the videos up 4x, but they run at 60 FPS. We can move the object in the basic scene, and the corresponding stylised image changes.

It is pretty cool, considering we only used a single image to train the model. With more images, it takes a good artist to keep the mapping coherent, but the artifacts noticeably clean up.

What Else?

One fun application is to take advantage of the fact that the model is a black box. Why not perturb the weights and see what this looks like? Below I perturb the weights with varying sine waves:
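In code, the idea could look roughly like the sketch below. It assumes the network weights are available as a flat list of floats; the function name `perturb_weights`, the amplitude, and the per-weight frequencies are arbitrary choices for illustration, not the settings used for the results here.

```python
import math

def perturb_weights(weights, t, amplitude=0.1):
    """Return a perturbed copy of `weights` at time `t` (in seconds).

    Each weight is offset by its own sine wave. The frequency is derived
    from the weight's index so the result is deterministic; the constants
    are hypothetical.
    """
    perturbed = []
    for i, w in enumerate(weights):
        frequency = 0.5 + 0.1 * i  # in Hz, different for every weight
        offset = amplitude * math.sin(2.0 * math.pi * frequency * t)
        perturbed.append(w + offset)
    return perturbed

base = [0.3, -1.2, 0.8, 0.05]  # stand-in for a trained network's weights

frame_0 = perturb_weights(base, t=0.0)  # sin(0) = 0, so identical to base
frame_1 = perturb_weights(base, t=0.4)  # every weight has drifted a little
```

The trained weights are never modified in place, so the original look is always recoverable, and no weight moves further than `amplitude` from its trained value.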

There's lots more to try here: design the model with a latent space and navigate in that for more structured transformations. What if we blend the weights of two different Neural Shaders?
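The simplest form of blending I can think of is a linear interpolation between the two sets of weights. The sketch below assumes both shaders share the same architecture and that their weights are flat lists of floats; `blend_weights` is a hypothetical name. Whether the in-between weights produce a pleasing image, rather than noise, is exactly the open question.

```python
def blend_weights(weights_a, weights_b, alpha):
    """Linearly interpolate two weight lists.

    alpha = 0 returns shader A's weights, alpha = 1 returns shader B's.
    """
    if len(weights_a) != len(weights_b):
        raise ValueError("both shaders must share the same architecture")
    return [(1.0 - alpha) * a + alpha * b
            for a, b in zip(weights_a, weights_b)]

# Made-up weights for two trained shaders.
shader_a = [0.25, -1.0, 0.5]
shader_b = [0.75, 1.0, -0.5]

halfway = blend_weights(shader_a, shader_b, 0.5)
```

Sweeping `alpha` from 0 to 1 over a few seconds would give a transition from one style to the other.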

Conclusion.

I see Neural Shaders as not necessarily a solution to a problem, but a new type of visual design. There are games within this space to explore: what about a Matrix-esque game where the player removes their 'Neural Glasses' and is suddenly shown a reality far different from the one they occupy? Or a 'Drug Simulator' where the player ingests various chemicals, and the weights of the neural network are perturbed in different ways?

These are games I hope to explore one day. If I don't get around to it, I hope this short post might inspire someone else to give it a try.